Text to avoid paywall

The Wikimedia Foundation, the nonprofit organization that hosts and develops Wikipedia, has paused an experiment that showed users AI-generated summaries at the top of articles after an overwhelmingly negative reaction from the Wikipedia editor community.

“Just because Google has rolled out its AI summaries doesn’t mean we need to one-up them, I sincerely beg you not to test this, on mobile or anywhere else,” one editor said in response to Wikimedia Foundation’s announcement that it will launch a two-week trial of the summaries on the mobile version of Wikipedia. “This would do immediate and irreversible harm to our readers and to our reputation as a decently trustworthy and serious source. Wikipedia has in some ways become a byword for sober boringness, which is excellent. Let’s not insult our readers’ intelligence and join the stampede to roll out flashy AI summaries. Which is what these are, although here the word ‘machine-generated’ is used instead.”

Two other editors simply commented, “Yuck.”

For years, Wikipedia has been one of the most valuable repositories of information in the world, and a laudable model for community-based, democratic internet platform governance. Its importance has only grown in the last couple of years during the generative AI boom as it’s one of the only internet platforms that has not been significantly degraded by the flood of AI-generated slop and misinformation. As opposed to Google, which since embracing generative AI has instructed its users to eat glue, Wikipedia’s community has kept its articles relatively high quality. As I reported last year, editors are actively working to filter out bad, AI-generated content from Wikipedia.

A page detailing the AI-generated summaries project, called “Simple Article Summaries,” explains that it was proposed after a discussion at Wikimedia’s 2024 conference, Wikimania, where “Wikimedians discussed ways that AI/machine-generated remixing of the already created content can be used to make Wikipedia more accessible and easier to learn from.” Editors who participated in the discussion thought that these summaries could improve the learning experience on Wikipedia, where some article summaries can be quite dense and filled with technical jargon, but that AI features needed to be clearly labeled as such and that users needed an easy way to flag issues with “machine-generated/remixed content once it was published or generated automatically.”

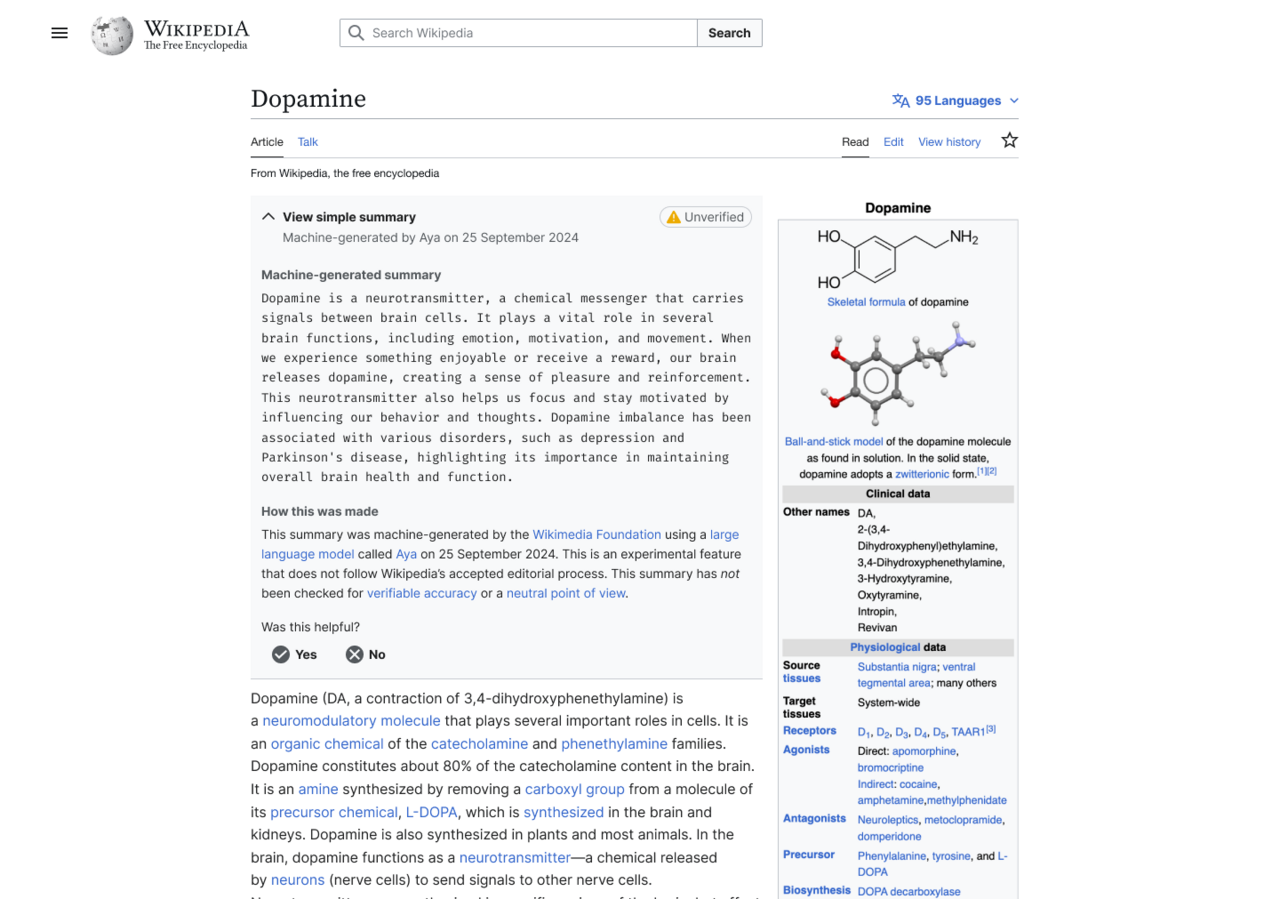

In one experiment where summaries were enabled for users who have the Wikipedia browser extension installed, the generated summary showed up at the top of the article, which users had to click to expand and read. That summary was also flagged with a yellow “unverified” label.

An example of what the AI-generated summary looked like.

Wikimedia announced that it was going to run the generated summaries experiment on June 2, and was immediately met with dozens of replies from editors who said “very bad idea,” “strongest possible oppose,” “Absolutely not,” etc.

“Yes, human editors can introduce reliability and NPOV [neutral point-of-view] issues. But as a collective mass, it evens out into a beautiful corpus,” one editor said. “With Simple Article Summaries, you propose giving one singular editor with known reliability and NPOV issues a platform at the very top of any given article, whilst giving zero editorial control to others. It reinforces the idea that Wikipedia cannot be relied on, destroying a decade of policy work. It reinforces the belief that unsourced, charged content can be added, because this platforms it. I don’t think I would feel comfortable contributing to an encyclopedia like this. No other community has mastered collaboration to such a wondrous extent, and this would throw that away.”

A day later, Wikimedia announced that it would pause the launch of the experiment, but indicated that it’s still interested in AI-generated summaries.

“The Wikimedia Foundation has been exploring ways to make Wikipedia and other Wikimedia projects more accessible to readers globally,” a Wikimedia Foundation spokesperson told me in an email. “This two-week, opt-in experiment was focused on making complex Wikipedia articles more accessible to people with different reading levels. For the purposes of this experiment, the summaries were generated by an open-weight Aya model by Cohere. It was meant to gauge interest in a feature like this, and to help us think about the right kind of community moderation systems to ensure humans remain central to deciding what information is shown on Wikipedia.”

“It is common to receive a variety of feedback from volunteers, and we incorporate it in our decisions, and sometimes change course,” the Wikimedia Foundation spokesperson added. “We welcome such thoughtful feedback — this is what continues to make Wikipedia a truly collaborative platform of human knowledge.”

“Reading through the comments, it’s clear we could have done a better job introducing this idea and opening up the conversation here on VPT back in March,” a Wikimedia Foundation project manager said. VPT, or “village pump technical,” is where the Wikimedia Foundation and the community discuss technical aspects of the platform. “As internet usage changes over time, we are trying to discover new ways to help new generations learn from Wikipedia to sustain our movement into the future. In consequence, we need to figure out how we can experiment in safe ways that are appropriate for readers and the Wikimedia community. Looking back, we realize the next step with this message should have been to provide more of that context for you all and to make the space for folks to engage further.”

The project manager also said that “Bringing generative AI into the Wikipedia reading experience is a serious set of decisions, with important implications, and we intend to treat it as such,” and that “We do not have any plans for bringing a summary feature to the wikis without editor involvement. An editor moderation workflow is required under any circumstances, both for this idea, as well as any future idea around AI summarized or adapted content.”

Why is it so damned hard for corporate to understand that most people have no use or need for AI at all?

“It is difficult to get a man to understand something, when his salary depends on his not understanding it.”

— Upton Sinclair

Wikipedia management shouldn’t be under that pressure. There’s no profit motive to enshittify or replace human contributions. They’re funded by donations from users, so their top priority should be giving users what they want, not attracting bubble-chasing venture capital.

It pains me to argue this point, but are you sure there isn’t a legitimate use case just this once? The text says that this was aimed at making Wikipedia more accessible to less advanced readers, like (I assume) people whose first language is not English. Judging by the screenshot they’re also being fully transparent about it. I don’t know if this is actually a good idea but it seems the least objectionable use of generative AI I’ve seen so far.

Considering AI uses LLMs and more often than not mixes metaphors, it just seems to me that the Wikimedia Foundation is asking for misinformation to be published unless there are humans to fact-check it.

One of the biggest challenges for a nonprofit like Wikipedia is to find cheap/free labor that administration trusts.

AI “solves” this problem by lowering your standard of quality and dramatically increasing your capacity for throughput.

It is a seductive trade. Especially for a techno-libertarian like Jimmy Wales.

Why the hell would we need AI summaries of a Wikipedia article? The top of the article is explicitly the summary of the rest of the article.

Even beyond that, the “complex” language they claim is confusing is the whole point of Wikipedia. Neutral, precise language that describes matters accurately for laymen. There are links to every unusual or complex related subject and even individual words in all the articles.

I find it disturbing that a major share of the userbase is supposedly unable to process the information provided in this format, and needs it dumbed down even further. Wikipedia is already the summarized and simplified version of many topics.

There’s also a “simple english” Wikipedia: simple.wikipedia.org

Oh come on, it’s not that simple. Add to that the language barrier. And in general, precise language and accuracy do not make knowledge more available to laymen. Laymen don’t have the vocabulary to start with; that’s pretty much the definition of being a layman.

There is definitely value in dumbing down knowledge, that’s the point of education.

Now, using AI or pushing guidelines for editors to do it, that’s an entirely different discussion…

They have that already: simple.wikipedia.org

The vocabulary is part of the knowledge. The concept goes with the word, that’s how human brains understand stuff mostly.

You can click on the terms you don’t know to learn about them.

You can click on the terms you don’t know to learn about them.

This is what makes Wikipedia special. Not the fact that it is a giant encyclopedia, but that you can quickly and logically work your way through a complex subject at your pace and level of understanding. Reading about elements but don’t know what a proton is? Guess what, there’s a link right fucking there!

I’m so tired of “AI”. I’m tired of people who don’t understand it expecting it to be magical and error free. I’m tired of grifters trying to sell it like snake oil. I’m tired of capitalist assholes drooling over the idea of firing all that pesky labor and replacing them with machines. (You can be twice as productive with AI! But you will neither get paid twice as much nor work half as many hours. I’ll keep all the gains.) I’m tired of the industrial-scale theft that apologists want to give a pass to while individuals who torrent can still get in trouble, and libraries are chronically underfunded.

It’s just all bad, and I’m so tired of feeling like so many people are just not getting it.

I hope Wikipedia never adopts this stupid AI Summary project.

People not getting things that seem obvious is an ongoing theme, it seems. We sat through a presentation at work by some guy who enthusiastically pitched AI to the masses. I don’t mean that’s what he did, I mean “enthusiasm” seemed to be his ONLY qualification. Aside from telling folks what buttons to press on the company’s AI app, he didn’t know SHIT. And the VP got on before and after and it was apparent that he didn’t know shit, either. Someone is whispering in these people’s ears and they’re writing fat checks, no doubt, and they haven’t a clue what an LLM is, what it is good at, nor what to be wary of. Absolutely ridiculous.

I know one study found that 51% of the AI-produced summaries it reviewed contained significant errors. So AI summaries are bad news for anyone who hopes to be well informed. Source: https://www.bbc.com/news/articles/c0m17d8827ko

Wrong community, please repost to the community for Onion articles.

When Wikipedia starts to publish AI-generated content, it will no longer be serving its purpose and it won’t need to exist anymore.

Too late.

With thresholds calibrated to achieve a 1% false positive rate on pre-GPT-3.5 articles, detectors flag over 5% of newly created English Wikipedia articles as AI-generated, with lower percentages for German, French, and Italian articles. Flagged Wikipedia articles are typically of lower quality and are often self-promotional or partial towards a specific viewpoint on controversial topics.

Human posting of AI-generated content is definitely a problem; but ultimately that’s a moderation problem that can be solved, which is quite different from AI-generated content being put forward by the platform itself. There wasn’t necessarily anything stopping people from doing the same thing pre-GPT, it’s just easier and more prevalent now.

Human posting of AI-generated content is definitely a problem

It isn’t clear whether this content is posted by humans or by AI-fueled bot accounts. All they’re sifting for is text with patterns common to AI text generation tools.

There wasn’t necessarily anything stopping people from doing the same thing pre-GPT

The big inhibiting factor was effort. ChatGPT produces long-form text far faster than humans can, and in a form that is harder to identify than the output of earlier Markov chain generators.

The fear is that Wikipedia will be swamped with slop content. Humans won’t be able to keep up with the work of cleaning it out.

Good! I was considering stopping my monthly donation. They better kill the entire “machine-generated” nonsense instead of just pausing, or I will stop my pledge!

Good! I was considering stopping my monthly donation.

Ditto. I don’t want to overreact, but it’s not a good look.